AI Performance

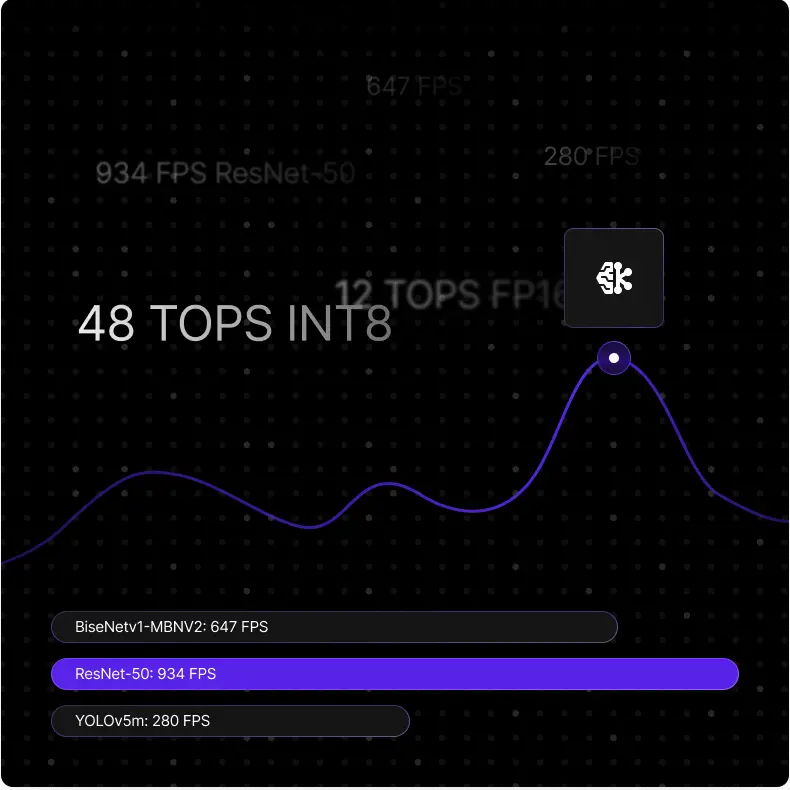

48 TOPS INT8 / 12 TOPS FP16:

Handle complex neural models effortlessly, from object detection to segmentation.
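As a rough way to read these figures, peak TOPS sets a lower bound on compute time per inference: divide the operations a model needs by the accelerator's peak rate. The sketch below assumes 100% utilization and a hypothetical model requiring 24 billion operations per inference. Real workloads run below peak, and vendors differ in how they count operations (for example, whether a multiply-accumulate counts as one op or two).

```python
def peak_latency_ms(model_gops: float, peak_tops: float) -> float:
    """Theoretical lower bound on compute time per inference, in milliseconds.

    model_gops: operations per inference, in billions (hypothetical input).
    peak_tops: accelerator peak throughput, in trillions of operations per second.
    Assumes 100% utilization, which real workloads do not reach.
    """
    # (gops * 1e9 ops) / (tops * 1e12 ops/s) = (gops / tops) * 1e-3 s = gops / tops ms
    return model_gops / peak_tops


# Hypothetical model needing 24 billion operations per inference.
for label, tops in (("INT8", 48.0), ("FP16", 12.0)):
    print(f"{label}: >= {peak_latency_ms(24.0, tops):.2f} ms per inference")
```

The 4x gap between the INT8 and FP16 peak figures carries straight through to this bound.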

Run YOLO, ResNet, MobileNet (90+ models):

Optimized for high throughput and low latency.

Inference Speed:

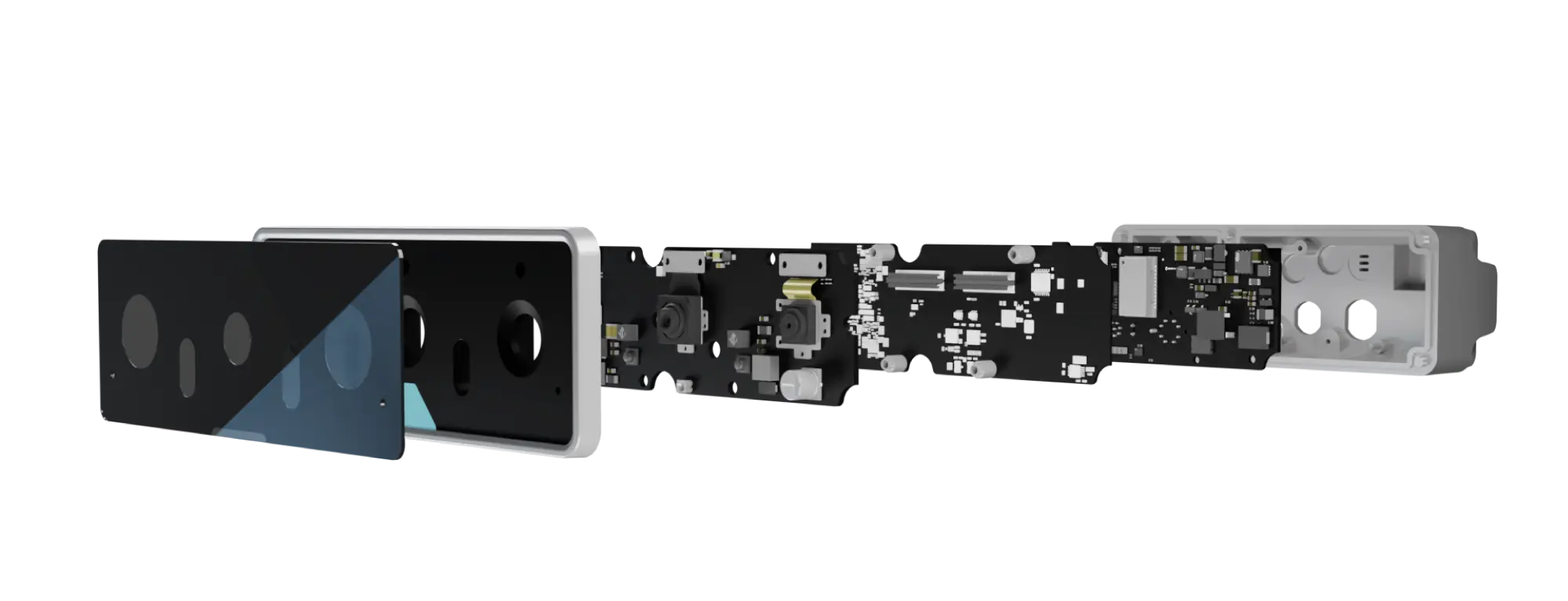

Luxonis devices deliver unparalleled AI performance at the edge, combining neural inference, stereo depth, and real-time vision in one compact package.

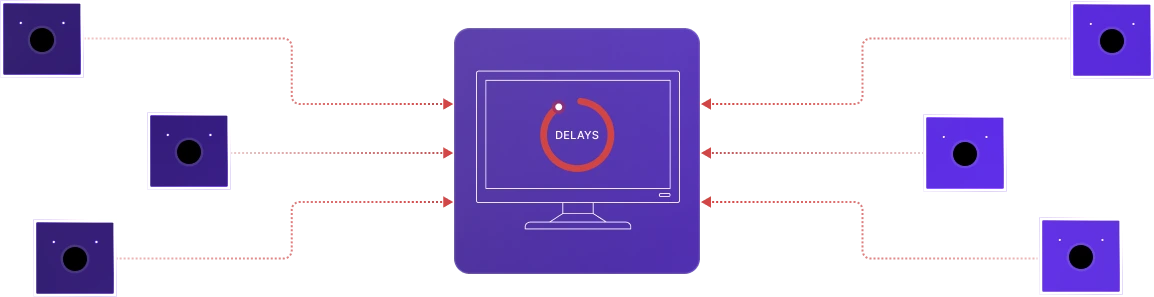

Why Edge Inference Matters

Centralized Local Compute

Luxonis Edge Inference

Reduced Cloud Dependency

Minimized Central Compute Load

Uninterrupted Operation

Optimized Latency and Real-Time Response

Energy Efficiency

Scalability

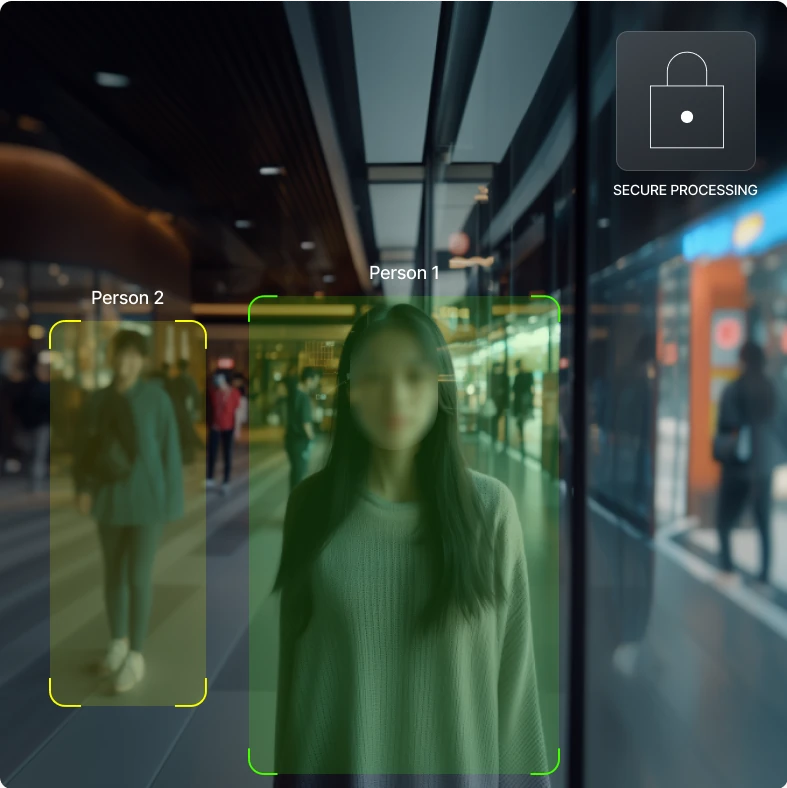

In today’s world, safeguarding personal and sensitive data is critical. Luxonis devices are built with privacy in mind, ensuring that Personally Identifiable Information (PII) never leaves the device unless explicitly intended. By processing data locally, our devices help you stay compliant with stringent privacy regulations, including GDPR and CCPA.

Process data locally, anonymize PII, and ensure compliance with privacy regulations like GDPR and CCPA.
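One common way to anonymize PII on-device is to mask the regions a detector flags (for example, faces or license plates) before any frame leaves the device. The sketch below is a generic illustration, not the DepthAI API: the frame is a plain grayscale pixel grid, and the bounding boxes are hypothetical stand-ins for on-device detector output. Production systems more often blur or pixelate than zero-fill, and may send only detection metadata instead of pixels.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max); max values exclusive


def mask_regions(frame: Frame, boxes: List[Box], fill: int = 0) -> Frame:
    """Return a copy of `frame` with every pixel inside `boxes` set to `fill`."""
    out = [row[:] for row in frame]  # copy so the raw frame is left untouched
    height = len(out)
    width = len(out[0]) if height else 0
    for x0, y0, x1, y1 in boxes:
        # Clamp each box to the frame bounds before masking.
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                out[y][x] = fill
    return out


frame = [[9] * 4 for _ in range(3)]
# Hypothetical detection covering columns 1-2 of rows 0-1.
print(mask_regions(frame, [(1, 0, 3, 2)]))  # [[9, 0, 0, 9], [9, 0, 0, 9], [9, 9, 9, 9]]
```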

On-Device Processing

All data is processed directly on the device, reducing the need to transmit sensitive information to external servers.

PII Protection

Compliance-First Design

Technical Features for Developers

High-accuracy depth mapping with up to 1/32 subpixel precision.
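Stereo depth follows from triangulation, z = f * B / d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. Subpixel precision lets d take fractional values; with 1/32 precision, a disparity can be stored as an integer count of 1/32-pixel steps (5 fractional bits). The focal length and baseline below are hypothetical, and the fixed-point encoding is an assumption made for illustration.

```python
SUBPIXEL_STEPS = 32  # 1/32-pixel disparity precision (5 fractional bits)


def depth_mm(raw_disparity: int, focal_px: float, baseline_mm: float) -> float:
    """Depth from a fixed-point disparity stored in 1/32-pixel units (assumed encoding)."""
    if raw_disparity <= 0:
        raise ValueError("disparity must be positive")
    disparity_px = raw_disparity / SUBPIXEL_STEPS
    return focal_px * baseline_mm / disparity_px  # z = f * B / d


# Hypothetical calibration values, for illustration only.
FOCAL_PX, BASELINE_MM = 800.0, 75.0

z = depth_mm(40 * SUBPIXEL_STEPS, FOCAL_PX, BASELINE_MM)          # 40 px disparity
z_sub = depth_mm(40 * SUBPIXEL_STEPS - 1, FOCAL_PX, BASELINE_MM)  # one 1/32 step less
z_int = depth_mm(39 * SUBPIXEL_STEPS, FOCAL_PX, BASELINE_MM)      # one whole pixel less
print(f"{z:.1f} mm, next subpixel step {z_sub:.1f} mm, next integer step {z_int:.1f} mm")
```

At this range, one whole-pixel disparity step moves the depth estimate by roughly 38 mm, while one 1/32-pixel step moves it by roughly 1 mm.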

Runs INT8/FP16 models optimized for edge devices, enabling high throughput with low power consumption.
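INT8 models store weights and activations as 8-bit integers plus a scale factor. The sketch below shows a generic symmetric, per-tensor scheme for intuition only; it is not the conversion toolchain used for Luxonis devices, which may quantize per channel or calibrate scales from sample data. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: q = clamp(round(x / scale), -127, 127)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # largest magnitude maps to +/-127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate real values."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # worst-case rounding error
```

Rounding to the nearest code keeps the reconstruction error within half a scale step per value.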

Supports models from TensorFlow, PyTorch, ONNX, and other frameworks and formats.

Simultaneously processes stereo depth, object detection, and multiple parallel video streams without external compute.

Operates at 5-25 W.

Eliminates the need for additional cooling or high-wattage power supplies.
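For a sense of scale, the 5-25 W envelope translates into daily energy as shown below. This assumes constant draw at each end of the range for 24 hours; actual consumption depends on the workload.

```python
def daily_energy_kwh(power_w: float, hours: float = 24.0) -> float:
    """Energy drawn at a constant power over `hours`, in kilowatt-hours."""
    return power_w * hours / 1000.0


# Constant draw at each end of the stated 5-25 W range (simplifying assumption).
for watts in (5.0, 25.0):
    print(f"{watts:.0f} W for 24 h = {daily_energy_kwh(watts):.2f} kWh")
```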

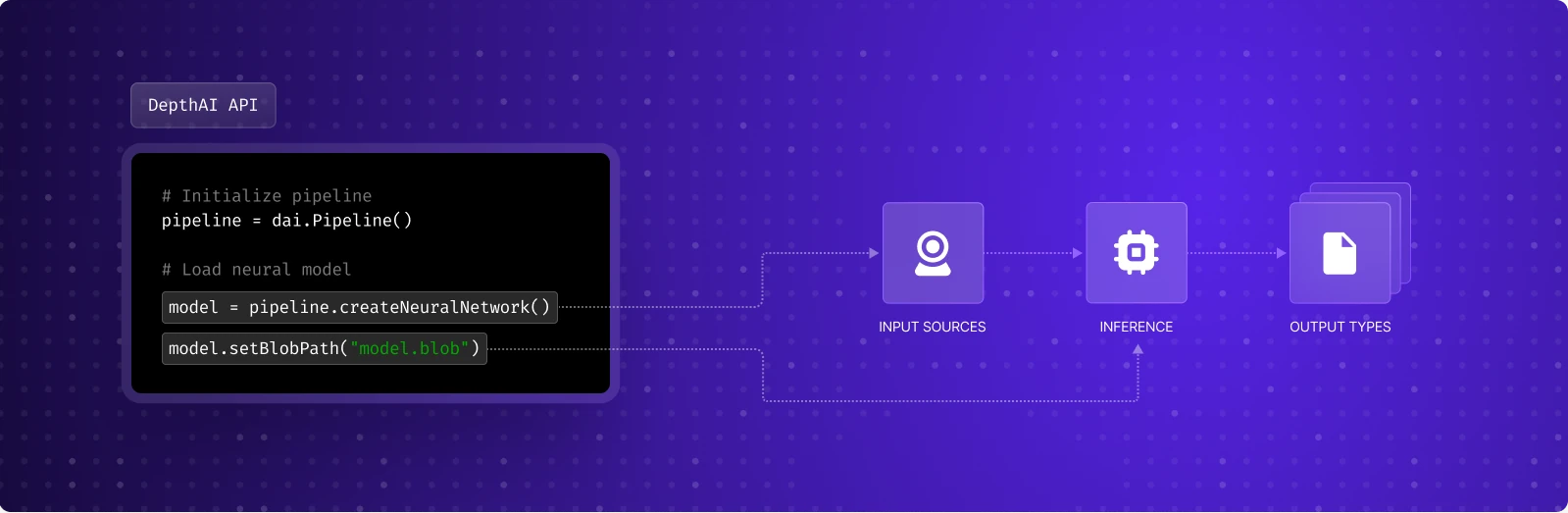

Fine-tune performance and precision settings directly via the DepthAI API.

Edge vs. Centralized AI

| Feature | Traditional (Central Compute) | Luxonis Edge Inference |
| --- | --- | --- |
| Latency | High (network-dependent) | Low (on-device) |
| Bandwidth Use | High (data streamed to/from cloud or central processing) | Minimal (local processing) |
| Energy Efficiency | High system-wide power consumption | Optimized for local efficiency |
| Privacy | Data streamed externally | Data processed locally |
| Reliability | Dependent on network/cloud availability | Independent, continuous operation |
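The bandwidth row can be made concrete with back-of-the-envelope numbers. The sketch compares streaming uncompressed 1080p video at 30 fps against sending only detection results; the 1.5 bytes per pixel (a YUV 4:2:0 layout such as NV12), ten detections per frame, and 24 bytes per detection (six float32 fields) are all illustrative assumptions.

```python
def raw_video_bytes_per_s(width: int, height: int, fps: int,
                          bytes_per_pixel: float = 1.5) -> float:
    """Uncompressed video rate; 1.5 bytes/pixel matches a YUV 4:2:0 layout such as NV12."""
    return width * height * bytes_per_pixel * fps


def metadata_bytes_per_s(detections_per_frame: int, fps: int,
                         bytes_per_detection: int = 24) -> int:
    """Rate for sending only detections; 24 bytes = six float32 fields (assumed layout)."""
    return detections_per_frame * bytes_per_detection * fps


video = raw_video_bytes_per_s(1920, 1080, 30)
meta = metadata_bytes_per_s(10, 30)
print(f"raw 1080p30: {video * 8 / 1e6:.1f} Mbit/s, detections only: {meta * 8 / 1e3:.1f} kbit/s")
```

Video compression such as H.264 shrinks the raw figure considerably, but a compressed stream still typically needs far more bandwidth than detection metadata alone.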